On 'Behavior, Purpose and Teleology': Enduring Insights from a Cybernetics Classic

A deep dive into the 1943 paper by Rosenblueth, Wiener, and Bigelow, its historical context, and its continuing contributions to philosophy of science, diverse intelligences and more.

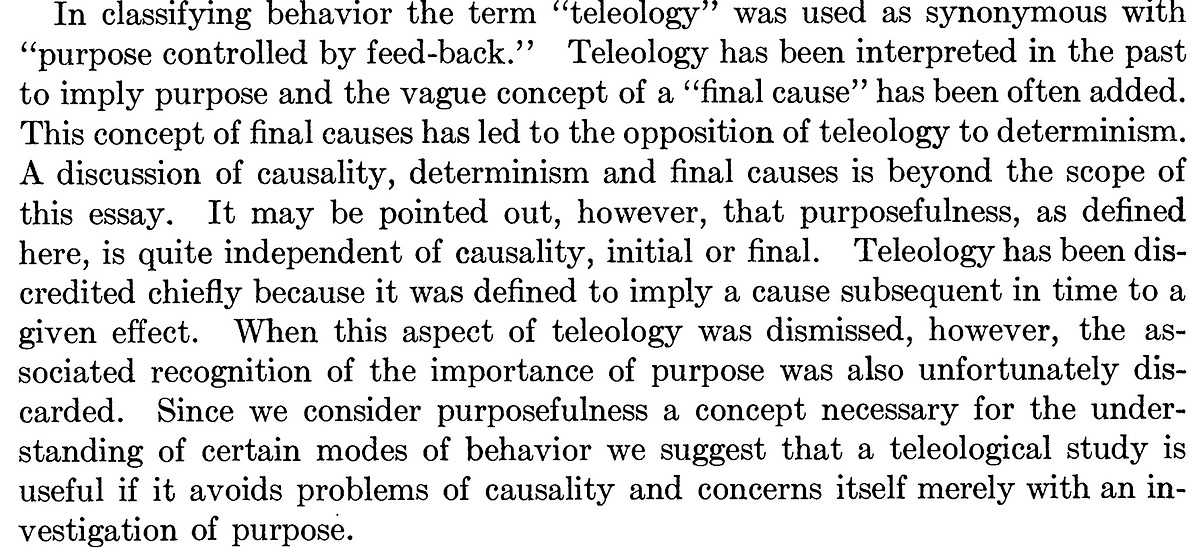

The 1943 paper “Behavior, Purpose and Teleology” was a response to the prevailing scholarly attitudes that dismissed teleological explanations as unscientific and obsolete. By redefining purpose in terms of feedback, Rosenblueth, Wiener, and Bigelow sought to rehabilitate the concept of teleology, striving towards a rigorous framework that could account for goal-directed behavior in both biological organisms and machines.

Through challenging the mechanistic orthodoxy of their time, the authors laid the groundwork for cybernetics and influenced the development of multiple disciplines that continue to explore the intricate balance between mechanism and purpose in complex systems. We will take a deeper dive into the context and content of the paper in this discussion.

Motivation Behind The Paper

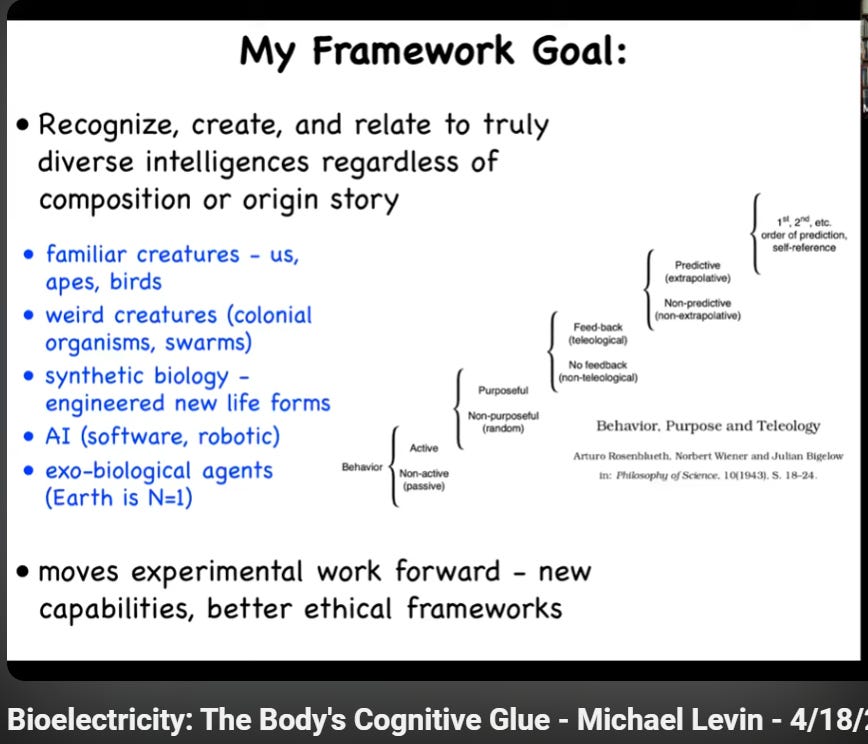

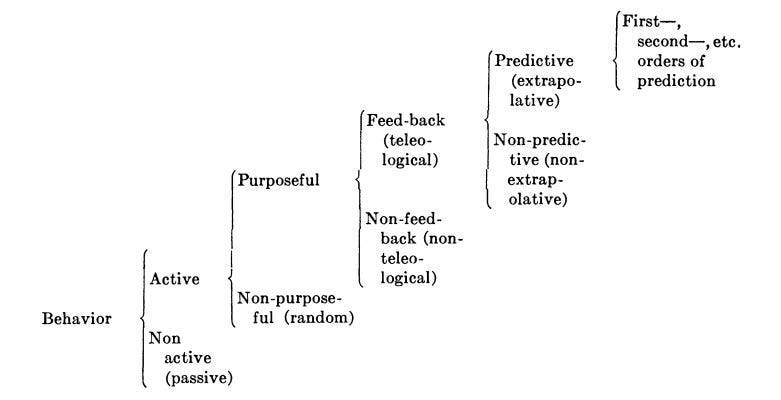

1: Selecting for predictive, complex behavior

The authors acknowledge that their classification is built from successive dichotomies, each singling out one class of interest and setting aside the remainder (the “non-class”); they also remark that other divisions are possible, to varying ends. Bradly Alicea discusses this in the context of predictive processing vs. enactivism, and also takes a deeper dive on this paper during a recent Saturday Morning Neurosim meeting.

Prediction is seen as interesting as it allows for differentiation of potential “orders” of predictive behavior; the authors mention various examples of how complex or multi-dimensional certain actions may be, as we discuss further below.

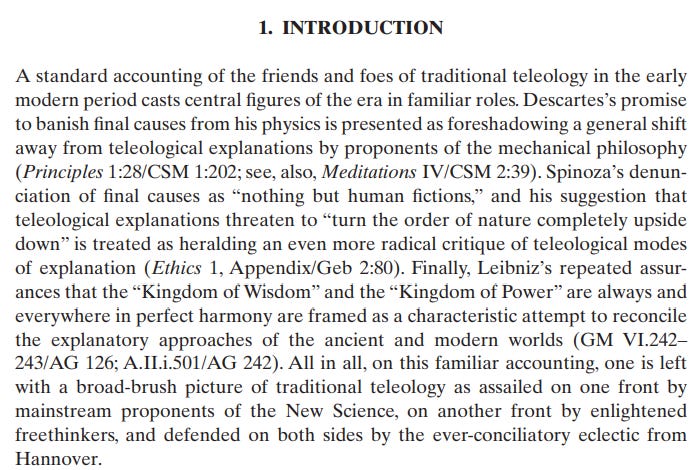

2: Historical context in reclaiming a teleological discourse via secular pivot

“Teleology” has been debated in ethical, causal and philosophical traditions for well over two millennia. Causation at large, and the concept of efficient cause in specific, transformed substantially during the Scientific Revolution (1540s-1680s), with efficient cause shifting from an integral part of a teleological framework to a central tenet of mechanistic philosophy. This shift not only redefined how natural phenomena were understood but also established new methodologies that would shape modern science, prioritizing observable interactions over inferred purposes.

Following the Scientific Revolution, the Age of Enlightenment saw considerable movement away from Scholasticism, which had dominated education in Europe since the 12th century; mechanism would come to take center stage, though not without contention, alongside evolving Deist thought. William Paley’s divine watchmaker justification for intricate complexity stands in contrast to Immanuel Kant’s earlier Critique of Judgement, which confronted mystical design while allowing room for teleological concepts in interpreting biological phenomena; Charles Darwin would further challenge traditional teleological reasoning with his development of natural selection and evolution.

Also of note, Maxwell’s Demon, introduced by James Clerk Maxwell in 1867, serves as a thought experiment that challenges the second law of thermodynamics; and 10 years after the publication of the 1943 Rosenblueth et al. paper, Francis Crick (with collaborators) would elucidate the structure of DNA and continue a wave of mechanistic, purposeless focus. (See Bradly’s commentary during a recent Cognition Futures discussion.)

3: Striving for a lens of analysis across natural and artificial agents

Through the framing of feedback relative to a goal, and how behaviors can be studied from this vantage point, the authors sought to demarcate a way of studying behaviors beyond conventional segregation between biological and artificial.

From our present-day viewpoint, we can point to the more robust study of agential activities that would follow: complexity science, agent-based modeling, neuro-inspired AI, or even aspects of the cognitive science project at large. But at the time, system, information, and control theories were still very much in development. A non-reductionist take on behavior, whose domain allowed constructed and naturally emerging agents to be seen from a uniform view, was a broader aim, taken up by the authors and their descendants on many fronts; “Cybernetics: Or Control and Communication in the Animal and the Machine” would be published five years later by co-author Norbert Wiener.

Assertions within the paper

In the sections below, we look at some of the nuggets from within the paper itself. These range from theory and philosophy, including the relationship of feedback to purposeful, voluntary behavior, to a discussion of what makes the grouping of machines and organisms substantive now, and how that may change ahead.

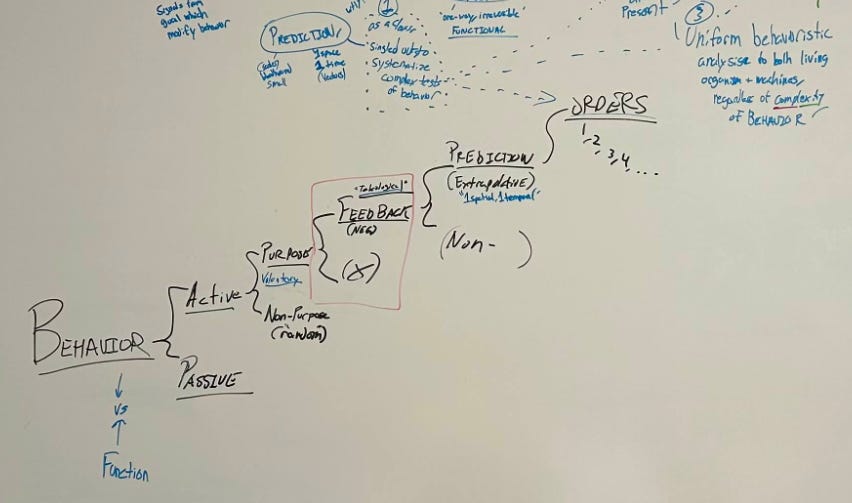

Behavior vs Function

The paper begins by noting that the authors’ take on behavior specifically omits structure and intrinsic organization:

In a functional analysis, as opposed to a behavioristic approach, the main goal is the intrinsic organization of the entity studied, its structure and its properties; the relations between the object and the surroundings are relatively incidental. From this definition of the behavioristic method a broad definition of behavior ensues. By behavior is meant any change of an entity with respect to its surroundings.

In discussing inputs and outputs, the authors note that their use of behavior would be far too wide in scope unless it is restricted or classified in some form. This leads to the justification of their diagram, which is intended to allow for classification of increasingly complicated behaviors through the nature of their predictive, goal-oriented actions.

Purposeful behavior is voluntary

The authors argue that what is selected is not the minutiae or components of moving the body in the world towards a goal; rather, it is the goal itself that comprises the voluntary selection associated with purpose.

“When we perform a voluntary action what we select voluntarily is a specific purpose, not a specific movement.”

The idea that “all machines are purposeful” is said to be “untenable.” In the view of the authors, a clock itself is not purposeful, in spite of being designed with a purpose in mind. In terms of how purpose is used here, there is no final state to which the clock is aspiring [*].

Teleology as purpose controlled by negative feedback

Here, we see the authors further staking their claim relative to functionality, by not only showing the significance of an agent’s relation to an environment, but also that signals “from the goal” are necessary as well.

If a goal is to be attained, some signals from the goal are necessary at some time to direct the behavior.

Negative feedback, then, offers this corrective measurement, influencing the outputs from the agent relative to achieving the goal.

Positive feed-back adds to the input signals, it does not correct them. The term feed-back is also employed in a more restricted sense to signify that the behavior of an object is controlled by the margin of error at which the object stands at a given time with reference to a relatively specific goal. The feed-back is then negative, that is, the signals from the goal are used to restrict outputs which would otherwise go beyond the goal. It is this second meaning of the term feed-back that is used here.

Additionally, the authors refer to cerebellar patients (or those with motor control trouble) and liken their difficulty to a machine’s flawed actions when its feedback is undamped. The task of drinking from a cup is employed to show how negative feedback relative to the goal (is the cup increasingly closer to the mouth, is the cup being positioned such that water can be imbibed) is what is being selected for; but in the case of the cerebellar patient, oscillatory patterns of movement increase without effective check and constraint, which in turn spills the water and inhibits the achievement of the goal.

So, if positive feedback amplifies the input signals without affording correction, and undamped feedback distorts the signal being received from the goal-state, then properly functioning negative feedback is to be seen as inseparable from the realization of a voluntary, intended goal or end state.
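To make the contrast concrete, here is a minimal sketch in Python. It is our own illustration rather than anything from the 1943 paper: we assume a one-dimensional, discrete-time movement toward a goal, in which each step's correction is proportional to the remaining error. The function name `reach` and the gain values are hypothetical choices made only for this example.

```python
def reach(gain, steps=12, start=0.0, goal=1.0):
    """Return the signed error after each step of an error-driven movement.

    Assumption (ours, not the paper's): the output at each step is simply
    the current error multiplied by a constant gain.
    """
    position = start
    errors = []
    for _ in range(steps):
        error = goal - position      # the "signal from the goal"
        position += gain * error     # output adjusted in proportion to the error
        errors.append(goal - position)
    return errors


# Well-damped negative feedback: the error shrinks toward zero.
damped = reach(gain=0.5)

# Overcorrecting negative feedback: the error flips sign and grows,
# loosely analogous to the oscillation described for the cerebellar patient.
overshooting = reach(gain=2.2)

# Positive feedback: the deviation is added to rather than corrected.
amplifying = reach(gain=-0.5)

for name, errs in [("damped", damped),
                   ("overshooting", overshooting),
                   ("amplifying", amplifying)]:
    print(name, [round(e, 2) for e in errs[:5]])
```

Under this assumed update rule, each step multiplies the error by `1 - gain`: a gain of 0.5 halves it, a gain of 2.2 reverses its sign while enlarging it, and a negative gain enlarges it without any reversal. Only the first case approaches the goal.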

Prediction, extrapolation, and coordinates

The authors differentiate levels of complexity through examples of organisms’ behavior and by distinguishing predictive from non-predictive servomechanisms. Unicellular organisms are non-predictive; a cat tracking where a mouse will go next is predictive.

Prediction is differentiated as requiring at least two coordinates: one of them must be time, and at least one must be spatial. The authors note that the “sensory receptors of an organism, or the corresponding elements of a machine, may therefore limit the predictive behavior”, with a key difference illustrated by how a bloodhound tracking a scent is not predictive, as it is only measuring the change in magnitude along a single dimension[**].
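The cat-and-mouse distinction can be sketched as a small simulation. This is a hedged illustration of our own, not the authors' formalism: we assume a mouse running in a straight line at constant speed, and a faster cat that either heads for where the mouse is now (non-predictive) or extrapolates from two successive observations, which gives it a time coordinate alongside the spatial ones (predictive). The function name `chase`, the speeds, the starting positions, and the catch radius are all hypothetical.

```python
import math


def chase(predictive, steps=200, dt=0.1, catch_radius=0.2):
    """Return the number of steps the cat needs to catch the mouse, or None.

    Assumptions (ours): constant mouse velocity, constant cat speed,
    and a simple "lead the target" rule for the predictive cat.
    """
    mouse = [0.0, 0.0]
    mouse_velocity = (1.0, 0.0)
    cat = [0.0, 10.0]
    cat_speed = 1.5

    for step in range(1, steps + 1):
        previous = (mouse[0], mouse[1])
        mouse[0] += mouse_velocity[0] * dt
        mouse[1] += mouse_velocity[1] * dt

        if predictive:
            # Two observations separated in time yield a velocity estimate.
            vx = (mouse[0] - previous[0]) / dt
            vy = (mouse[1] - previous[1]) / dt
            distance = math.hypot(mouse[0] - cat[0], mouse[1] - cat[1])
            lead_time = distance / cat_speed  # rough time needed to close the gap
            aim = (mouse[0] + vx * lead_time, mouse[1] + vy * lead_time)
        else:
            # Follow the source as it is now, with no extrapolation.
            aim = (mouse[0], mouse[1])

        dx, dy = aim[0] - cat[0], aim[1] - cat[1]
        to_aim = math.hypot(dx, dy)
        if to_aim > 0:
            move = min(cat_speed * dt, to_aim)
            cat[0] += dx / to_aim * move
            cat[1] += dy / to_aim * move

        if math.hypot(mouse[0] - cat[0], mouse[1] - cat[1]) < catch_radius:
            return step
    return None


reactive_steps = chase(predictive=False)
predictive_steps = chase(predictive=True)
print("non-predictive:", reactive_steps, "predictive:", predictive_steps)
```

With these assumed values, both cats catch the mouse, but the extrapolating cat cuts the corner and arrives in fewer steps. Removing the time-separated observation leaves the cat with nothing to extrapolate from, which is the limitation the authors attribute to one-dimensional tracking.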

Contrasting via causality: Beyond historical context, the authors carefully differentiate prediction from causality, where causality is designated as “one-way, relatively irreversible”, and functional in relationship. Teleology and causality share only the time axis, without sharing the relation to the environment and the inherent selection of a goal that purposeful, teleological behavior necessitates.

On future engineering, grouping Machines and Organisms together, and EGRT

The final pages reveal the authors’ vantage point on what is tenable in engineering now, relative to what may become tenable in the future. They contend that the methods are similar, and that whether they remain so depends on the potential emergence of qualitative differences. (This is interesting to consider regarding what would become an orthodox metaphor in the later-developed field of cognitive science: that the mind itself is a machine, even a computer.)

Beyond remarks about implications for the means of construction, there is an interesting statement that becomes fodder for many discussions on the nature of biology, models, maps & territory, and the like:

[…] In future years, as the knowledge of colloids and proteins increases, future engineers may attempt the design of robots not only with a behavior, but also with a structure similar to that of a mammal. The ultimate model of a cat is of course another cat, whether it be born of still another cat or synthesized in a laboratory. [p 22]

The notion of modeling or being able to produce a model eventually ties into reference and control. Later in the development of cybernetics and eventual control and systems theories, the 1970 paper “Every good regulator of a system must be a model of that system” by R. C. Conant and W. R. Ashby would bring about a curious set of ideas regarding the nature of managing states, or being able to regulate, account for, or perhaps predict behaviors.

So, in the context of our 1943 paper, we can see the vantage point of those attempting to discern what scope feasibly contains natural and constructed agents, along with an attempted disclosure of the limitations or suppositions that support the usefulness of such a scope. In doing so, they offer humility about what is accessible in their contemporary era, while surfacing insight around what an entity is composed of relative to what its behavior is, and how models of either can be of use [***].

(Selected) modern works of interest

Within various reading groups and projects at Orthogonal Research and Education Lab, we’ve come across several ties to this paper. Below is a light sampling of those authors and their works:

Margaret Boden: a British cognitive scientist and philosopher known for her extensive work on the nature of the mind, creativity, and artificial intelligence. In her seminal work, “Mind as Machine: A History of Cognitive Science” (2006), Boden delves into the development of cognitive science and discusses how the mind can be conceptualized as a machine. The book is intended to provide a historical account of the field rather than a declaration that the correct approach of cognitive science is the reduction of the mind to a mere mechanical device, though it offers that mechanistic explanations can significantly illuminate how the mind works.

Thomas Fuchs: a psychiatrist and philosopher known for his work in phenomenology and the philosophy of psychiatry. In his book, “The Ecology of the Brain: The Phenomenology and Biology of the Embodied Mind” (2018), Fuchs presents a comprehensive critique of mechanistic and reductionist approaches to understanding the mind. Instead, he advocates for an embodied and ecological perspective, emphasizing the interrelation between the brain, body, and environment; his work builds upon the Francisco Varela lineage of more holistic approaches to cognition.

Valentino Braitenberg: the paragraphs preceding the lone figure in the paper have a particular relation to the 1986 book “Vehicles: Experiments in synthetic psychology.” A takeaway from Braitenberg’s work is less about purpose and more that intelligent-seeming behavior can arise from small differences in how an agent is designed to interpret or respond to stimuli; perhaps our authors would see it as a functionality-driven take on complex behavior. His work would become the inspiration for the Orthogonal Research and Education Lab’s Developmental Braitenberg Vehicles project series, including “Braitenberg Vehicles as Developmental Neurosimulation”, and continues to lend insight to contemporary matters in cognitive science and related fields.

Michael Levin: a developmental and synthetic biologist known for his research in bioelectricity, morphogenesis, and regenerative biology. This paper appears frequently in Levin’s presentations, with clear connections to its engineering insights, as well as to furthering the aim of grouping organism and machine via the contemporary space of diverse intelligences. Its concepts also extend to Levin’s work on Xenobots with Josh Bongard, their co-founded Institute for Computationally Designed Organisms, as well as their papers such as “Living Things Are Not (20th Century) Machines: Updating Mechanism Metaphors in Light of the Modern Science of Machine Behavior.” On a particularly wide-ranging and potentially under-attended matter, Levin frequently footnotes his presentations (and his lab’s website) with reference to the need for modernizing ethical frameworks as the implications of this line of work are further realized.

David Krakauer: an evolutionary theorist and president of the Santa Fe Institute, Krakauer appears to envision a form of Complexity Science as the study of “teleonomic matter”, or, theories about things that make theories; or perhaps, the study of purposeful agents. Krakauer’s work centers more on origins of life and less-personal takes on experience, but has developed concepts such as the Information Theory of Individuality. He is particularly interested in understanding how information is processed and transmitted across different levels of biological and social organization, and how this influences the evolution of intelligence and cognition.

Further Reading and Future Writing

To conclude, a few brief comments and associated questions on ideas from our discussions on the original 1943 paper.

Sampling, embodiment, and constraints: In the authors’ depiction of predictive behaviors, there is discussion about the speed required to process both having a goal and “receiving” feedback from the goal in the environment. There are many deep-seated matters to consider here: potential implications for the constraints on the domains of affordances; questions about how processing (or sampling) speed affects predictive capacities; and the question of how far cognitive offloading frees up predictive capacities.

“Signal from the goal” and investigating feedback: if the authors carefully demarcated their purpose-driven negative feedback as a vital part of the agent-goal communication within purposeful behavior, what does this indicate about the ability to interpret or perceive such a signal?

Prediction of hitting a target, and the predicted impact of language: how do nuance and articulation for directing movement in the physical world relate to nuance and articulation for directing movement in the social world?

Finally, here are some additional texts and concepts, some suggested and some intended (in our reading groups!):

“What is Life?” (1944): Erwin Schrödinger’s work explored the physical aspects of living cells, discussing order, entropy, and genetic information. While not a direct critique, it contributed to the discourse on life processes and whether they could be fully explained by physics and chemistry.

Purposeful and Non-Purposeful Behavior, a 1950 response from Rosenblueth and Wiener to Richard Taylor’s critique.

Introduction of “Teleonomy”: To avoid metaphysical connotations, scientists like Colin Pittendrigh developed the term “teleonomy” to describe apparent purposefulness arising from natural processes.

Second-Order Cybernetics: later cyberneticists such as Heinz von Foerster and Gordon Pask emphasized second-order cybernetics, focusing on the observer’s role and self-referential systems. They critiqued early cybernetics for not adequately addressing the complexity of living systems and cognition.

Autopoiesis: Humberto Maturana and Francisco Varela introduced the concept of autopoiesis to describe self-producing, autonomous systems, bringing a new dimension to discussions of purpose in living organisms.

Teleosemantics: Philosophers like Ruth Millikan and Daniel Dennett later developed theories like teleosemantics, which attempt to naturalize teleology by explaining intentionality and mental content in evolutionary terms. Also, Dennett introduced the idea of treating entities as “intentional systems” for predictive purposes, which relates to attributing purpose in a pragmatic sense.

Footnotes

[*] This offers some interesting questions on the topic of the “discrete vs. continuous”, perhaps suggesting that a clock’s continuous motion disqualifies the achieving of a discrete outcome. Could one argue that the clock is designed to continuously advance to the next second of activity, and is thereby a “loop” of discrete actions? What the authors are getting at in this paper, with the goal of purposeful, teleological, predictive behavior, seems to be focused elsewhere, reasonably so. The clock does not solicit feedback from its environment so as to differentiate the selection of actions relative to a desired outcome. This is a useful point at which to consider the vantage point and domain the authors are demarcating. It’s also a particularly salient reference to the divine watchmaker argument, further distancing mystical implications for purpose, and instead seeking to highlight an agent’s selective capacity.

[**] The multiple-dimension requirement for prediction is interesting to consider in light of Braitenberg Vehicles. Braitenberg likened the simplistic nervous system setup to perhaps demonstrating emotions, but it is clear the vehicles do not themselves predict an outcome nor seek an end state. Relating this to the above example of a clock offers an interesting juxtaposition, one that may fit well with David Krakauer’s use of the term “teleonomic” matter or agents, and intentions for advancing complexity science.

[***] The phrasing of modeling and cats is still used in contemporary discussions, particularly regarding biology or organismic phenomena and the theories used to parse them.
