Why Can Crows Use Sticks But Robots Struggle With Their Own Bodies? Natural v Artificial Embodied Intelligence

Notes from Foresight Institute's discussion with Michael Levin, Josh Bongard, and Tarin Ziyaee

The Scene is a collection of posts around various events in the innovation, AI, and human-technology interface space that I frequent. These posts are less about in-depth analysis and more for coverage of related ideas and topics as their public-facing discussions emerge.

BOSTON | VIRTUAL - SEPTEMBER 20, 2025

A crow can pick up a stick and use it as a tool on its first try. A monkey can learn to drive a car. You can operate a spoon, a piano, a drone—devices with no precedent in your evolutionary history. Yet state-of-the-art robots struggle to adapt their control even to their own bodies, let alone extend it to unfamiliar tools.

This is the puzzle that opened yesterday’s [1] Foresight Institute Neuro Virtual Seminar, where Tarin Ziyaee, Michael Levin, and Josh Bongard gathered to explore the gap between embodied natural intelligence and our artificial attempts to replicate it. The question driving the discussion: Why do biological systems excel at the very thing modern robotics finds most difficult, namely flexibly extending control through uncertain, changing environments and novel tools?

Over a fast-moving 45 minutes, these researchers dismantled comfortable assumptions about what intelligence is, where boundaries between self and world lie, and why our engineering paradigms keep missing something fundamental. What emerged wasn’t just a critique of current robotics, but a roadmap for rethinking how we build intelligent systems from the ground up.

Discussion Overview: Core Themes

Intelligence Beyond 3D Space

Levin opened by challenging our spatial bias—our tendency to recognize intelligence only in medium-sized objects moving through three-dimensional space. Biological intelligence operates across multiple high-dimensional spaces: physiological state space, metabolic state space, gene expression, and anatomical morphospace. Morphogenesis itself is behavior—collective intelligence navigating anatomical possibilities. When cells coordinate to build a body, they’re executing policies analyzable through behavioral science, not just following deterministic programs.

The Unreliable Medium Advantage

A key insight: biology’s flexibility emerges from spending eons with unreliable components. Living systems cannot assume what will happen; even their own parts are unpredictable. This forces a focus on problem-solving capacity rather than memorizing specific solutions. Tadpoles with eyes grafted onto their tails can see immediately, with no additional evolutionary selection needed. The same genome produces humans or anthrobots [2]: different ways of being emerge from the same toolkit because the system excels at “doing the best it can with what it has.”

The Plasticity of Self

Bongard emphasized that biological systems maintain fundamentally plastic boundaries between self and world. An infant doesn’t know which actuators belong to it—the reliable food-bringing entity (parent) initially seems like part of the body. This plasticity stands in stark contrast to robotics’ industrial heritage of maximizing certainty and ensuring identical operation across iterations.

Biology achieves “machines within machines within machines”—competent agents all the way down. When an autonomous vehicle encounters an out-of-distribution event, it has no plan B, no other cells inside to turn to. The rigidly defined self becomes a liability.

Imitation Learning’s Fundamental Flaw

The discussion of imitation learning revealed a deeper issue: the paradigm starts with answers (copy these motions) rather than questions (what do I need to do to reach this goal?). Natural systems constantly run micro-experiments with the world, spawning and killing sensory-motor programs, pinging the environment to understand what works.

Current robotics approaches are motion-centered and passive: the learner and teacher are pre-defined, and the interesting problems are already solved. Bongard raised a provocative question from his earlier work with developmental psychologist Andy Meltzoff: “Who is my teacher?” His point was that when the teacher is handed to the learner in advance, “you’ve already solved the interesting part of the problem for the learner.”

So, what, yeah, what to imitate and who is doing the imitating? So, sorry to bring this back to the plastic self, right, it’s again the assumption is often “there’s a robot and maybe it’s learning to copy other robots and other humans.”

I co-authored a paper [3] with Andy Meltzoff, a developmental psychologist, years ago now about “Who is my teacher?” and it’s been so long I’ve forgotten everything about that paper except that question, right?

Again, like, if you don’t clearly have a good boundary, it’s not so clear who to learn from. The subject and object, the learner and the teacher, it’s not so clear-cut.

And in machine learning and re imitation learning as you mentioned it’s clear the robot is given the learner is given the teacher it’s all set up for them it’s a very passive process; you’ve already solved the interesting part of the problem for the learner.

And so yet again, there’s an example about how if we go in with an incorrect framing, we might not get what imitation learning was originally designed to do.

(Transcript from Foresight Discussion)
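The contrast between starting with answers and starting with questions can be made concrete with a toy sketch. This is not anything the panelists presented: the `Body` class, its single `gain` parameter, and both policy functions are hypothetical names invented for illustration. The sketch assumes a one-dimensional body whose actuator strength differs from the teacher's. Replaying the teacher's motions misses the goal on the changed body, while one small probe of the body (a crude stand-in for a "micro-experiment") is enough to reach it.

```python
class Body:
    """Hypothetical 1-D body: each command moves it by gain * command."""

    def __init__(self, gain):
        self.gain = gain        # actuator strength, unknown to the learner
        self.position = 0.0

    def act(self, command):
        self.position += self.gain * command
        return self.position


def imitate(body, teacher_commands):
    """Start with answers: replay the teacher's motions verbatim."""
    for command in teacher_commands:
        body.act(command)
    return body.position


def probe_then_reach(body, goal, probe=0.1):
    """Start with a question: poke the body, see what happens, then plan."""
    before = body.position
    after = body.act(probe)                    # the micro-experiment
    estimated_gain = (after - before) / probe  # assumes a nonzero gain
    body.act((goal - after) / estimated_gain)  # cover the remaining distance
    return body.position


teacher_commands = [0.5, 0.5]  # reaches goal 1.0 on the teacher's body (gain 1.0)

same_body = imitate(Body(gain=1.0), teacher_commands)     # reaches the goal
changed_body = imitate(Body(gain=0.5), teacher_commands)  # stops halfway
adaptive = probe_then_reach(Body(gain=0.5), goal=1.0)     # reaches the goal
```

The toy is deliberately linear and noise-free. The only point is that the replay policy encodes motions, while the probing policy encodes a goal plus a way of asking the body what it can do.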

Memory Remapping + Zero-Shot Transfer

Levin teased forthcoming work on what he called “zero-shot transfer learning”: datasets with no apparent relationship to a problem somehow containing actionable intelligence for solving it. The biological precedent: caterpillar-to-butterfly transformation [4]. The caterpillar learns to crawl toward leaves in response to light stimuli. After dissolving most of its brain in the cocoon and rebuilding a completely different architecture for flight, the butterfly retains this learning, but remapped. None of the specific memories matter (butterflies don’t crawl or eat leaves), yet the information persists in transformed form.

This illustrates a crucial principle: “It’s never a tape recorder, it was always messages that we are re-interpreting to see what you can do with it.” Levin suggests biological learning involves constant reinterpretation of memory, telling yourself the best story you can about what your own memories mean.

Polycomputation and Hidden Biology

Bongard introduced polycomputation [5], the concept that vibrations across multiple frequencies in biological tissue perform computations simultaneously at the same place. Neurons and body parts compute across the frequency spectrum, something “we’ve only just started to look for.” This represents potential avenues for bio-inspired technology we haven’t yet explored.
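As a loose analogy only, and not Bongard's method or a model of tissue, the idea of several results living "at the same place" on different frequencies resembles frequency multiplexing. The sketch below assumes a single one-dimensional signal; the function names and the chosen frequency bins (3 and 7) are invented for illustration. Two unrelated values are written into one signal and read back independently by looking at different frequencies.

```python
import math

N_SAMPLES = 256  # samples in one observation window (arbitrary choice)


def vibrate(amplitudes):
    """Superpose sine waves; `amplitudes` maps frequency bin -> amplitude."""
    return [
        sum(a * math.sin(2 * math.pi * k * t / N_SAMPLES)
            for k, a in amplitudes.items())
        for t in range(N_SAMPLES)
    ]


def read_at(signal, k):
    """Estimate the amplitude of the sine component at frequency bin k."""
    n = len(signal)
    return (2.0 / n) * sum(
        s * math.sin(2 * math.pi * k * t / n) for t, s in enumerate(signal)
    )


# Two unrelated values share one signal, each carried at its own frequency.
signal = vibrate({3: 1.0, 7: 0.25})
low = read_at(signal, 3)    # close to 1.0
high = read_at(signal, 7)   # close to 0.25
empty = read_at(signal, 5)  # close to 0.0, nothing was written there
```

What an observer gets out of the same physical signal depends on which frequency they attend to, which is the part of the polycomputation idea this toy is meant to convey.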

The discussion also highlighted our “data starvation” problem: while computer vision dominates robotics research (a legacy of the 2012 AlexNet revolution), real-world agents must deal with mechanical forces, the object-on-object interactions for which we have no equivalent of ImageNet. We may not even know what kinds of data embodied intelligence requires. [6]

The Reliability Paradox

When asked about reliability, the panelists reframed the question: Biology is reliable, but at the level of maintaining consistent narratives [7], not consistent geometry or timing. It’s the Ship of Theseus problem: focus on keeping “the ship” reliable, not the planks. Current robotics fixates on proximate causes (the physical body) rather than ultimate causation (what we actually want to happen).

The hard bargain: achieving truly adaptable robots [8] means surrendering industrial certainty and tolerances. [9] We cannot have both completely open-ended systems and systems that do exactly what we retrospectively want; there’s a Pareto frontier requiring difficult choices.
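For readers unfamiliar with the term, a Pareto frontier is the set of options that cannot be improved on one axis without losing on another. The sketch below is purely illustrative: the design names and their scores for adaptability and predictability are made up, not measurements from the discussion or from any real system.

```python
def pareto_front(designs):
    """Return names of designs that no other design beats on both axes.

    Each design is (name, adaptability, predictability); higher is better.
    """
    front = []
    for name, adapt, predict in designs:
        dominated = any(
            a >= adapt and p >= predict and (a > adapt or p > predict)
            for _, a, p in designs
        )
        if not dominated:
            front.append(name)
    return front


# Hypothetical scores, invented for illustration only.
designs = [
    ("industrial arm", 0.1, 0.95),
    ("learned policy", 0.6, 0.6),
    ("open-ended agent", 0.9, 0.2),
    ("brittle prototype", 0.3, 0.4),
]

front = pareto_front(designs)
# front == ["industrial arm", "learned policy", "open-ended agent"]
```

Every design on the front is a defensible choice. Picking among them is the "difficult choice" the panelists describe, since more open-endedness is paid for in predictability.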

Practical Implications

For those entering this space, Bongard pointed to his book with Pfeifer, How the Body Shapes the Way We Think, and his lab’s Ludobots subreddit—programming projects easing people into robotics, neural networks, neuroscience, biomechanics, and physics engines.

The path forward involves “metarobotics”—admixtures of control policy and physical hardware at every scale, from individual components with their own intelligence up to cloud-deployed fleets learning collectively. The future isn’t a six-foot metal body with a GPU brain because that division of self is arbitrary, born from human bias rather than principled design.

The central message: We must remain humble before biology’s lessons, avoid enforcing predetermined frames, and recognize that truly capable robots will be agents with intrinsic motivation—not old-school machines we completely control.

A few additional notes from the panelists:

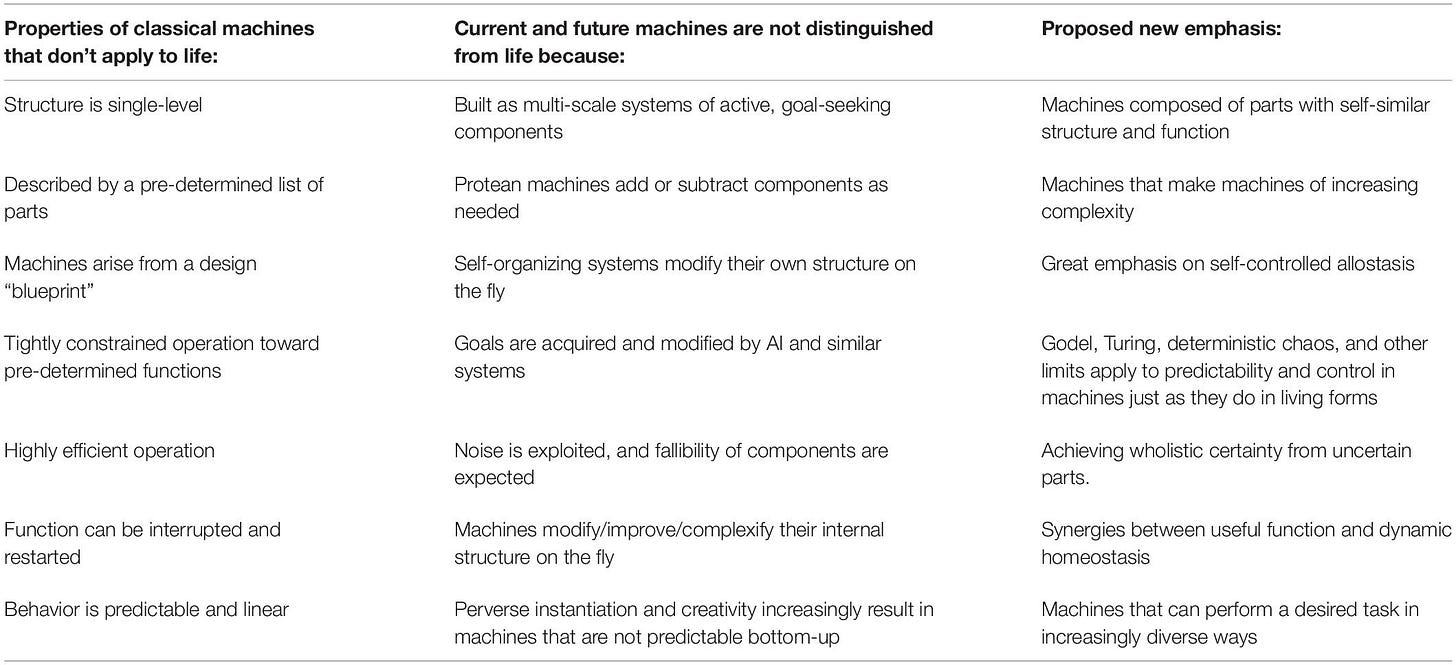

Living Things are not “Machines”

After a solid bit of banter between Bongard and Levin, I made a cheeky comment in the chat that it sounded like they were saying “Living things are not (20th century) machines,” a reference to a 2021 paper by the same pair.

We had also covered the paper previously, during a 2024 meeting of the Cognition Futures Reading Group hosted by Orthogonal Research and Education Lab.

The subtitle of the paper reads “Updating Mechanism Metaphors in Light of the Modern Science of Machine Behavior,” and the paper calls for revisiting the language we use to demarcate or label that which generates behavior.

…or are they?

My comment received several reactions and a follow-up reply from Levin, in which he linked his latest take on the topic: “Living Things Are Not Machines (Also, They Totally Are).” The March 2025 piece was featured in Noema Magazine, but it’s worth checking Levin’s personal site for additional commentary.

He states early in the article (where “LTNM” abbreviates “living things are not machines”):

Thus, my quarrel with LTNM is not coming from a place of sympathy with molecular reductionism; I consider myself squarely within the organicist tradition of theoretical biologists like Denis Noble, Brian Goodwin, Robert Rosen, Francisco Varela and Humberto Maturana, whose works all focus on the irreducible, creative, agential quality of life; however, I want to push this view [Technological Approach to Mind Everywhere] further than many of its adherents might. LTNM must go, but we should not replace this concept with its opposite, the dreaded presumption that living things are machines; that is equally wrong and also holds back progress.

He clarifies:

Many who support LTNM never specify whether they mean the boring 20th-century machines, today’s quite different artifacts, or the fruits of all possible engineering efforts in the deep future. By failing to answer the hard question of defining what a “machine” is — they neglect a point at the core of their claim.

For an overall brief on the piece: Levin argues that the debate over whether “living things are not machines” is rooted in two false premises: that we can objectively define what something fundamentally “is,” and that our formal models capture the complete story of a system’s capabilities.

He proposes instead a pluralistic, pragmatic approach—recognizing that all our descriptions are metaphors suited to specific contexts and purposes, not universal truths.

Rather than ask whether biology or engineered systems “really are” machines or minds, we should empirically test which conceptual toolkits (mechanistic, cognitive, cybernetic) prove most useful for predicting, controlling, and understanding different systems.

The key insight: nothing is fully encompassed by our models—not living things, not “simple” machines, not even basic algorithms—and the surprising emergence of proto-cognition may appear in unexpected places if we abandon rigid categories and remain humble about the limits of our current understanding.

Tangent: Thoughts on Bitter Lessons

A general sidebar came up following the discussion: “Is this somewhat congruent to Richard Sutton’s Bitter Lesson, perhaps indicating the ‘maps’ are indeed limited, vantage-point & context specific, rather than the more whole, more robust space of behaviors in general?” It led to these thoughts:

There’s a strong parallel here. Sutton’s Bitter Lesson essentially argues that hand-crafted, human-knowledge-based approaches in AI consistently lose to general-purpose learning methods that leverage computation. The “maps” we build—our clever representations, domain knowledge, and engineered features—turn out to be less powerful than simply searching/learning over larger behavioral spaces.

Levin’s argument maps onto this in several ways:

The Core Alignment: Both reject the idea that our current formalisms capture the territory. Sutton says our domain-specific insights are less valuable than we think; Levin says our categorical distinctions (machine/life, cognitive/mechanical) are projections of our limited models, not ontological truths. Both advocate for humility about what our “maps” reveal.

Where They Diverge: Sutton’s lesson is somewhat eliminativist—throw out the hand-crafted knowledge, scale up learning. Levin’s pluralism is more expansive—we need multiple maps/metaphors simultaneously, each revealing different aspects of the behavioral space. He’s not saying “abandon mechanism for cognition” but rather “use whichever lens generates useful predictions for your context.”

The Behavioral Space Insight: The questioner’s framing is apt; the “space of behaviors in general” is richer than any single map suggests. Levin’s examples (caterpillar memory remapping, xenobots, tadpoles with ectopic eyes) show biology accessing this space through mechanisms our models don’t predict. Similarly, Sutton’s point is that the actual space of effective behaviors discoverable through search vastly exceeds what human intuition engineers into systems.

A Tension: Sutton implies there’s a privileged approach (scale + search). Levin’s pluralism resists this—there’s no single “right” way to interact with complex systems. So while both critique overconfidence in our maps, Levin might push back on the idea that any one methodology (even massive-scale learning) will capture the territory better than context-dependent tool selection.

An attempt at synthesis: Our formal models are always partial, context-dependent compressions. The question isn’t which map is true, but which maps unlock useful interactions with the underlying behavioral manifold.

Teleology, ever lurking

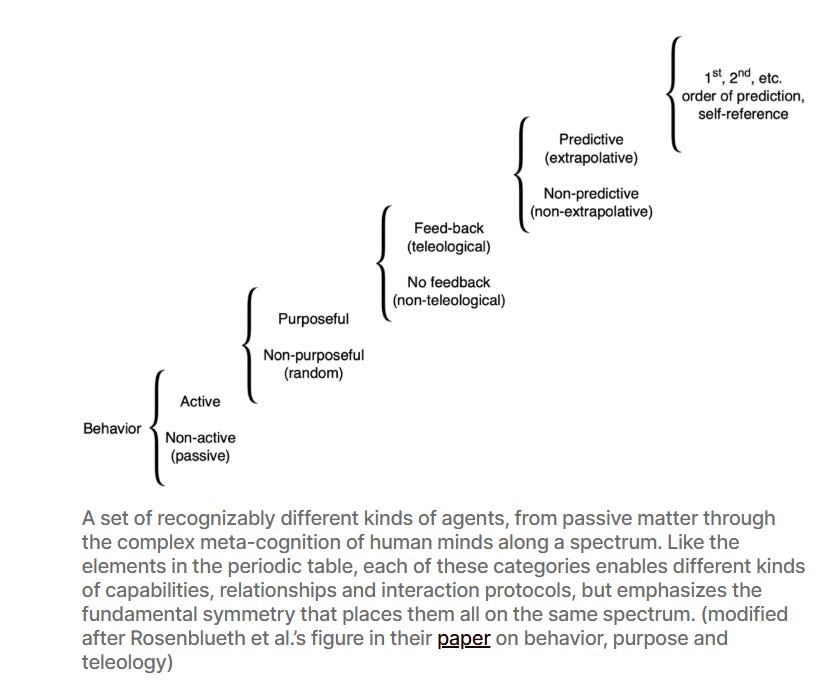

Further in the Noema article, we find a figure Levin cites frequently, taken from Rosenblueth et al.’s 1943 paper on behavior, purpose, and teleology. I admire Levin’s effort to trace back to this paper from over 80 years ago, seeking to better realize the authors’ aims to develop a more robust take on how behaviors emerge.

You can read my breakdown of the same paper here:

Josh Bongard

Levin had to depart about halfway through, and then Bongard held center stage and answered a few questions. Outside of the above summary, I want to highlight a few points for future reference:

Thomas Fuchs mention

The conversation shifted just as I mentioned Thomas Fuchs’ Ecology of the Brain in chat, so I’ll never know what Bongard was going to say. It came up in the context of “whole-organism” activities, and of restructuring investigations so they can address them.

Footnote #5, which features Bongard, Levin, and Thomas Froese, may contain relevant discussion for this arena. Froese is friendly to, and perhaps more ready to materialize, some of Fuchs’ insights, anti-reductionism, and alluded-to-but-not-explicated methodological hopes.

Q&A: “How to get started in this space?”

An audience member asked where those curious about Bongard’s work should begin.

Noting that it was outdated relative to modern AI but that its core ideas remained relevant, Bongard mentioned How the Body Shapes the Way We Think: A New View of Intelligence. [10] For those interested in coding and developing simulations representing some of these insights, he mentioned his lab’s subreddit, r/ludobots. He gave an overview:

There’s a whole bunch of programming projects there that ease you into the shallow end of all the nuts and bolts of robotics, neural networks, a little bit of neuroscience, a little bit of biomechanics, a little bit of physics engines, the kind of tools that tend to be used to tackle these sorts of questions.

And yeah, you can just follow those programming projects. And they’re designed so that when you get to the end of one of those paths in Ludobots, you’ve got a platform, an experimental platform, and then you can hopefully have enough intuition about how to take that platform and use it to test out some of your own ideas.

That’s it for this really interesting discussion. Thank you to the Foresight Institute for hosting these virtual salons, and in particular to Lydia La Roux and Tarin Ziyaee for moderating and hosting. I’m particularly curious whether any readers have used the Ludobots coursework or read Josh’s 2006 book, among the many other references mentioned.

Stay tuned for more and follow The Scene section of my newsletter for more event recaps and notes.

Cheers, J.

[2] Gumuskaya, G., et al. (2023). “Motile Living Biobots Self-Construct from Adult Human Somatic Progenitor Seed Cells.” Advanced Science. The anthrobots paper.

[3] Kaipa, K.N., Bongard, J.C., & Meltzoff, A.N. (2010). “Self discovery enables robot social cognition: Are you my teacher?” Neural Networks, 23(8-9), 1113-1124. doi: 10.1016/j.neunet.2010.07.009. PMID: 20732790.

[4] See also: Blackiston, D.J., Silva Casey, E., & Weiss, M.R. (2008). “Retention of Memory through Metamorphosis: Can a Moth Remember What It Learned As a Caterpillar?” PLOS ONE, 3(3): e1736.

[5] See also: this fantastic discussion where “Josh Bongard, Tom Froese, and Mike Levin explore the concepts of Irruption Theory, Polycomputing, and the broader implications of these ideas on our understanding of minds and biological systems.”

[6] Meta’s Yann LeCun has some ideas (2022, 2022 paper, 2025).

[7] I am very interested in this approach of narrativity, across domains. If you have interesting papers or resources about it, please share in the comments below.

[8] Kriegman, S., et al. (2020). “A scalable pipeline for designing reconfigurable organisms.” PNAS, 117(4), 1853-1859. The original xenobots paper (a Levin and Bongard collaboration).

[9] Thus the “Living things are/not machines” subtheme.

[10] Pfeifer, R., & Bongard, J. (2006). How the Body Shapes the Way We Think: A New View of Intelligence. MIT Press.